Web Scraping with Python: A Comprehensive Guide to Extracting Data from the Web

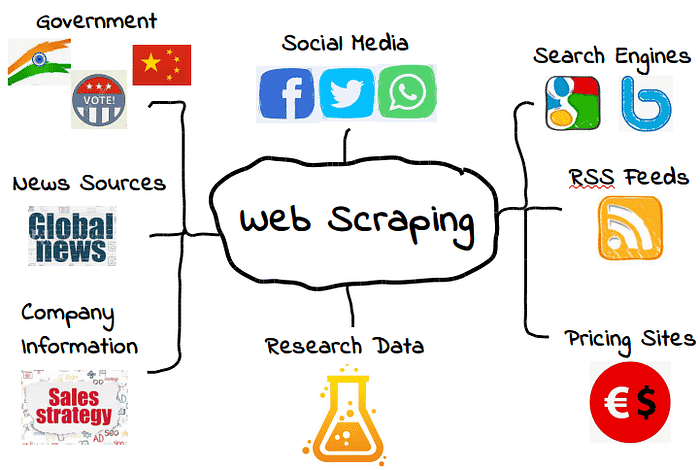

Web scraping is the automated extraction of data from websites: code retrieves a page, parses its HTML, and stores the relevant data in a structured form for later use. Typical targets include news articles, blog posts, product details, and social media posts. The technique is useful for data analysis and competitive intelligence.

One of the most popular Python libraries for web scraping is BeautifulSoup, distributed as the beautifulsoup4 package and imported as bs4. Its built-in methods make it easy to extract content from HTML and XML documents.

Using BeautifulSoup in Python

1. Install BeautifulSoup

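Before getting started, make sure you have BeautifulSoup installed. The examples below also use the requests library, which is a separate package and can be installed the same way (pip install requests). You can install BeautifulSoup using pip by running the following command in your terminal: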

pip install beautifulsoup4

2. Import necessary modules

Import the requests module in your Python file to make HTTP requests, and the BeautifulSoup class from the bs4 module to parse HTML content.

import requests

from bs4 import BeautifulSoup

3. Make HTTP request

Use the requests library to make an HTTP GET request to the website and save the response in a variable.
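A slightly more defensive version of this request can be sketched as follows. The helper name `fetch_html`, the ten-second timeout, and the injectable `get` parameter are assumptions made for illustration and are not part of the original walkthrough; the `timeout` argument and `raise_for_status()` are regular parts of requests.

```python
import requests


def fetch_html(url, timeout=10.0, get=requests.get):
    """Hypothetical helper: fetch a page and return its raw bytes.

    `get` defaults to requests.get; it is a parameter only so the
    function can be tried out without network access.
    """
    response = get(url, timeout=timeout)  # give up if the server takes too long
    response.raise_for_status()           # raise on 4xx/5xx instead of parsing an error page
    return response.content
```

Calling `fetch_html("https://example.com")` would then return the page body, ready to be passed to BeautifulSoup.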

url = "https://example.com"

response = requests.get(url)

4. Parse HTML content

Use the BeautifulSoup class to create a soup object, passing the response content and the parser type as arguments.
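To see what the soup object offers without touching the network, here is a minimal sketch that parses a small inline document. The sample markup and variable names are assumptions standing in for a real response.

```python
from bs4 import BeautifulSoup

# Assumed sample markup standing in for response.content
html = b"<html><head><title>Demo page</title></head><body><p>Hello</p></body></html>"

# "html.parser" is the parser bundled with Python's standard library
soup = BeautifulSoup(html, "html.parser")

page_title = soup.title.string    # text inside <title>
first_paragraph = soup.p.string   # text inside the first <p>
print(page_title, "|", first_paragraph)
```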

soup = BeautifulSoup(response.content, "html.parser")

5. Extract data from HTML

Use the methods of the soup object to find the data you need, then use it however you intend. You can also save the data to a file for future use.

Suppose this program should scrape all links in a web page and print them to the console.

links = soup.find_all('a')  # find all anchor tags

for link in links:
    href = link.get('href')  # get value of href from each link/anchor tag
    print(href)

6. Storing extracted data

Once you have the data, you can clean and manipulate it however you like in your code. The next step is to store it for future processing.
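When each item has more than one field, a CSV file may be a better fit than plain text. The following is a minimal sketch using the standard csv module; the sample HTML, the file name links.csv, and the column names are assumptions chosen for illustration.

```python
import csv

from bs4 import BeautifulSoup

# Assumed sample markup; in practice this would be the downloaded page
html = '<a href="/home">Home</a><a href="/about">About us</a><a>No link</a>'
soup = BeautifulSoup(html, "html.parser")

rows = []
for link in soup.find_all("a"):
    href = link.get("href")
    if href is None:  # skip anchors without an href attribute
        continue
    rows.append({"text": link.get_text(strip=True), "href": href})

# newline="" is what the csv module documentation recommends for file objects
with open("links.csv", "w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["text", "href"])
    writer.writeheader()
    writer.writerows(rows)
```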

with open('links.txt', 'w') as file:
    for link in links:
        href = link.get('href')  # get value of href from each link/anchor tag
        if href:  # skip anchor tags that have no href attribute
            file.write(href + '\n')  # newline added so each link is on its own line

Methods of BeautifulSoup

1. find (tag)

This method finds a tag by its tag name inside the HTML content. It returns only the first matching tag, or None if nothing matches.
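Both outcomes can be seen on a small inline document. This is only a sketch; the sample markup and variable names are assumptions.

```python
from bs4 import BeautifulSoup

html = "<div><h1>First heading</h1><h1>Second heading</h1></div>"
soup = BeautifulSoup(html, "html.parser")

first_h1 = soup.find("h1")    # only the first <h1> is returned
missing = soup.find("table")  # the sample has no <table>, so this is None

print(first_h1.get_text())
if missing is None:
    print("no <table> found")
```

Checking for None before using the result avoids an AttributeError when the page does not contain the tag.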

# Example: Find the first <h1> tag

h1_tag = soup.find("h1")

2. find_all (tag)

Similar to the find method, this method finds tags by tag name inside the HTML content, but it returns all tags matching the given tag name rather than only the first.
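A short sketch of how the results behave, again on assumed sample markup: the return value acts like a list, it is empty rather than None when nothing matches, and the optional `limit` argument caps the number of results.

```python
from bs4 import BeautifulSoup

html = """
<ul>
  <li class="item">Apple</li>
  <li class="item sale">Banana</li>
  <li>Cherry</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

all_items = soup.find_all("li")               # every <li>
classed = soup.find_all("li", class_="item")  # <li> tags whose classes include "item"
first_two = soup.find_all("li", limit=2)      # stop after two matches
no_match = soup.find_all("table")             # empty result, not None

names = [li.get_text() for li in classed]
print(names)
```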

# Example: Find all <h1> tags

h1_tags = soup.find_all("h1")

# Example: Find all <h1> tags with the title class

title_h1_tags = soup.find_all("h1", class_='title')

3. select (tag)

This method finds tags using CSS selectors such as tag name, id, and class. It returns a list of all HTML tags that match the given selector.
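A few selector forms, sketched on assumed sample markup. `select_one` is the companion method that returns only the first match (or None).

```python
from bs4 import BeautifulSoup

html = """
<div id="main">
  <h1 class="title">Welcome</h1>
  <p class="intro">First paragraph</p>
  <p>Second paragraph</p>
</div>
<p>Outside</p>
"""
soup = BeautifulSoup(html, "html.parser")

titles = soup.select("h1.title")      # tag name combined with a class
inside_main = soup.select("#main p")  # <p> descendants of the element with id="main"
intro = soup.select_one("p.intro")    # first match only, or None if nothing matches

print([p.get_text() for p in inside_main])
```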

# Example: Find all <h1> tags with the title class

title_h1_tags = soup.select("h1.title")

4. get (tag)

This method gets the value of an attribute of the retrieved tag, such as class, id, href, or name. It returns None if the attribute doesn’t exist, unless a default value is passed as the second argument.
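The difference between `get` and dictionary-style access can be sketched as follows, using assumed sample markup. Note that class is a multi-valued attribute, so BeautifulSoup returns it as a list.

```python
from bs4 import BeautifulSoup

html = '<a href="/docs" class="nav external">Docs</a><a>Placeholder</a>'
soup = BeautifulSoup(html, "html.parser")
with_href, without_href = soup.find_all("a")

href = with_href.get("href")                      # "/docs"
classes = with_href.get("class")                  # list of class names
missing = without_href.get("href")                # None, because the attribute is absent
fallback = without_href.get("href", "not found")  # default value instead of None

try:
    without_href["href"]  # dictionary-style access raises KeyError instead
except KeyError:
    print("no href on this tag")
```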

# returns the link

href = link.get("href")

# returns the link if it exists, otherwise returns "not found"

href = link.get("href", "not found")

5. text

This property gives the text content of an HTML tag, excluding the HTML tags and attributes themselves.

paragraph=soup.find('p')

text=paragraph.text # Output: This is a paragraph.
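When the markup contains nested tags or stray whitespace, the related `get_text` method gives more control over the result. A small sketch on assumed sample markup:

```python
from bs4 import BeautifulSoup

html = "<p>  This is <b>a</b> paragraph. </p>"
soup = BeautifulSoup(html, "html.parser")
paragraph = soup.find("p")

raw = paragraph.text  # keeps the original whitespace
# Strip each text fragment and join the fragments with a single space
clean = paragraph.get_text(separator=" ", strip=True)

print(repr(raw))
print(repr(clean))
```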

6. parent

This property gives the parent element of an element.
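The parent is itself a tag object, so its name and attributes can be read like those of any other tag. A minimal sketch, assuming a paragraph wrapped in a div:

```python
from bs4 import BeautifulSoup

html = '<div class="card"><p>This is a paragraph.</p></div>'
soup = BeautifulSoup(html, "html.parser")

paragraph = soup.find("p")
parent = paragraph.parent             # the enclosing <div> as a tag object
parent_name = parent.name             # tag name of the parent
parent_classes = parent.get("class")  # attributes work on the parent too

print(parent_name, parent_classes)
```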

paragraph = soup.find('p')

parent = paragraph.parent  # the enclosing tag, e.g. a <div>; its tag name is parent.name

These are some of the basic methods this library offers for extracting data from websites. You can read the official documentation for more information: https://www.crummy.com/software/BeautifulSoup/bs4/doc/
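Putting the steps together, the link scraper from earlier could be sketched as one small function. The helper name `extract_links`, the base URL, and the sample markup are assumptions; resolving relative links with `urljoin` and dropping duplicates are additions that the walkthrough above does not cover.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://example.com/blog/"  # assumed address of the scraped page

# Assumed sample markup; in practice this would be response.content
html = """
<a href="/about">About</a>
<a href="post-1.html">Post 1</a>
<a href="https://other.example/x">External</a>
<a href="/about">About again</a>
<a name="top">No href</a>
"""


def extract_links(html, base_url):
    """Hypothetical helper: return unique absolute links in page order."""
    soup = BeautifulSoup(html, "html.parser")
    found = []
    for anchor in soup.find_all("a"):
        href = anchor.get("href")
        if not href:  # skip anchors without a usable href
            continue
        absolute = urljoin(base_url, href)  # resolve relative links against the page URL
        if absolute not in found:
            found.append(absolute)
    return found


links = extract_links(html, BASE_URL)

with open("links.txt", "w", encoding="utf-8") as file:
    file.write("\n".join(links) + "\n")
```

With a live page, `html` would come from `response.content` and the base URL from `response.url`.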
